A Tutorial Review of Functional Connectivity Analysis Methods and Their Interpretational Pitfalls

- 1Department of Brain and Cognitive Sciences, The Picower Institute for Learning and Memory, Massachusetts Institute of Technology, Cambridge, MA, USA

- 2Neurobiology of Language Department, Max Planck Institute for Psycholinguistics, Nijmegen, Netherlands

- 3Donders Centre for Cognitive Neuroimaging, Donders Institute for Brain, Cognition and Behaviour, Radboud University Nijmegen, Nijmegen, Netherlands

Oscillatory neuronal activity may provide a mechanism for dynamic network coordination. Rhythmic neuronal interactions can be quantified using multiple metrics, each with their own advantages and disadvantages. This tutorial will review and summarize current analysis methods used in the field of invasive and non-invasive electrophysiology to study the dynamic connections between neuronal populations. First, we review metrics for functional connectivity, including coherence, phase synchronization, phase-slope index, and Granger causality, with the specific aim to provide an intuition for how these metrics work, as well as their quantitative definition. Next, we highlight a number of interpretational caveats and common pitfalls that can arise when performing functional connectivity analysis, including the common reference problem, the signal to noise ratio problem, the volume conduction problem, the common input problem, and the sample size bias problem. These pitfalls will be illustrated by presenting a set of MATLAB-scripts, which can be executed by the reader to simulate each of these potential problems. We discuss how these issues can be addressed using current methods.

Introduction

Different cognitive or perceptual tasks require a coordinated flow of information within networks of functionally specialized brain areas. It has been argued that neuronal oscillations provide a mechanism underlying dynamic coordination in the brain (Singer, 1999; Varela et al., 2001; Fries, 2005, 2015; Siegel et al., 2012). These oscillations likely reflect synchronized rhythmic excitability fluctuations of local neuronal ensembles (Buzsáki and Wang, 2012), and may facilitate the flow of neural information between nodes in the network when the oscillations are synchronized between those nodes (Womelsdorf et al., 2007). The neural information transmitted from one region to another is reflected by the action potentials, where the action potentials themselves may be temporally organized in bursts. These bursts may occur during oscillations and may further enhance the reliability of the information transmission (Lisman, 1997) or contribute to the establishment of long-range synchronization (Wang, 2010). The brain could dynamically coordinate the flow of information by changing the strength, pattern, or the frequency with which different brain areas engage in oscillatory synchrony.

The hypothesis that neuronal oscillations in general, and inter-areal synchronization of these oscillations in particular, are instrumental for normal brain function has resulted in widespread application of quantitative methods to evaluate neuronal synchrony in electrophysiological data. These data can be obtained with invasive or non-invasive recording techniques, and in a context that involves an experimental manipulation or in a context that is task-free. Irrespective of the recording technique and context, once the data have been collected the experimental researcher is faced with the challenge to quantify neuronal interactions as well as to provide a valid interpretation of the findings. We feel that this is challenging for several reasons. First, the methods literature provides a multitude of metrics to quantify oscillatory interactions (for example, a recent review characterized 42 distinct methods, Wang et al., 2014), often described with a large amount of technical detail. Some methods, such as coherence and Granger causality, are based on rigorous statistical theory of stochastic processes, while others, such as Phase-Locking Value (PLV) are modifications to these methods that may be somewhat ad hoc, but nevertheless useful. Each of these metrics has their own advantages and disadvantages, and their own vigorous adherents and opponents. It is often difficult to choose and justify which method to use, even for the technically initiated. Second, since the algorithmic implementation of a particular interaction metric can be quite complicated, some research groups may have an idiosyncratic implementation of their championed interaction measures, with limited accessibility to the wider research community. This complicates applicability of these particular metrics and the comparison to other metrics. Third, the interpretation of the findings is typically not straightforward and results are therefore prone to being over-interpreted.

The purpose of this paper is to provide a (non-exhaustive) review and tutorial for the most widely used metrics to quantify oscillatory interactions, and to provide the reader with an intuitive understanding of these metrics. First, we provide a general taxonomy of metrics to estimate functional connectivity. Next, we provide a more formal definition of the most commonly used metrics, where possible illustrating the principles behind these metrics using intuitive examples. In the last part, we discuss various analysis pitfalls that one can encounter in applying these methods to real data. Using simulations, we generate synthetic time series to demonstrate how a multitude of practical issues can arise to generate spurious functional connectivity, which should not be interpreted in terms of underlying neuronal interactions. The different problems we discuss are signal-to-noise ratio (SNR) differences, limits in sample size, volume conduction/electromagnetic field spread, the choice of reference, and the problem of unobserved inputs. The generation of the synthetic time series as well as their analysis has been performed in MATLAB, using FieldTrip (Oostenveld et al., 2011), which will enable the comparison of how different data features affect the metrics of oscillatory interactions, and how different metrics perform on the same data. For each simulation of common pitfalls, where possible we present practical steps that can be used to mitigate the concerns. These simulations are put forward as toy examples of some of the problems that can occur, and are not intended to reproduce the full complexity of real data. Yet, we hope that they will serve as a useful guide to common interpretation issues and the current state of the art in addressing them. Finally, we also discuss where future methods might be useful in order to deal with limitations of current methods.

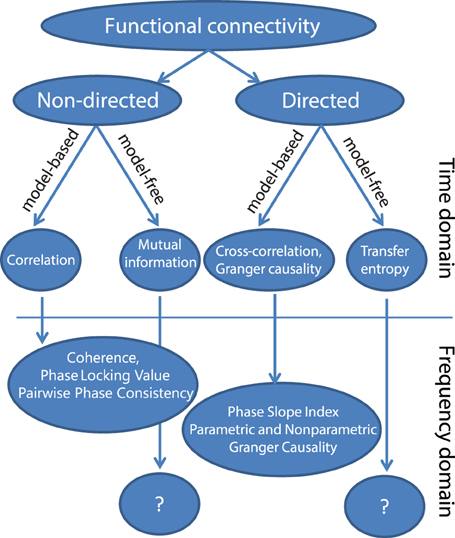

Taxonomy of Functional Connectivity Metrics

In this section, we will present a possible taxonomy of commonly used metrics for functional connectivity (Figure 1) and briefly describe the main motivations for each of those methods. A first subdivision that can be made is based on whether the metric quantifies the direction of the interaction. Non-directed functional connectivity metrics seek to capture some form of interdependence between signals, without reference to the direction of influence. In contrast, directed measures seek to establish a statistical causation from the data that is based on the maxim that causes precede their effects, and in the case of Granger causality and transfer entropy, that causes in some way predict their effects. These definitions of statistical causality were originally developed by Wiener (1956) and later practically implemented using auto-regressive models by Granger (1969). In neuroscience, a rich and growing literature has evolved that used these particular methods to quantify neuronal interactions, which has been reviewed elsewhere (Bressler and Seth, 2011; Sakkalis, 2011; Seth et al., 2015).

Within both directed and non-directed types of estimates, a distinction can be made between model-free and model-based approaches. The model-based approaches depicted in Figure 1 all make an assumption of linearity with respect to the kinds of interactions that may take place between two signals. The simplest measure for non-directed model-based interactions is the Pearson correlation coefficient, which measures the linear relationship between two random variables. In the general linear modeling framework the squared correlation coefficient (R2) represents the fraction of the variance of one of the variables (or signals) that can be explained by the other, and vice versa. A more generalized approach that does not assume a linear relationship is mutual information (Kraskov et al., 2004), which measures the generalized (linear and non-linear) interdependence between two or more variables (or time series) using concepts from information theory.

The Pearson correlation coefficient (and mutual information) as such are non-directed measures of interaction. Also, these measures ignore the temporal structure in the data, and treat the time series as realizations of random variables. In other words, this latter property means that the estimated connectivity will be the same irrespective of whether the time series have been randomly shuffled or not. Yet, when we shift the two time series with respect to one another before the correlation is computed (and do this shift at multiple lags), we will obtain the cross-correlation function, and evaluation of the cross-correlation as a function of time lag does account for temporal structure in the data. In particular, in some well-behaved cases it may sometimes be used to infer directed neuronal interactions. Specifically, the cross-correlation function has been effective to study neuronal systems containing dominant unidirectional interactions that exert their largest influence at a specific time delay (e.g., the retino-geniculate or geniculocortical feedforward pathways, Alonso et al., 1996; Usrey et al., 1998). In these cases, the time lag of maximal correlation and the magnitude of correlation can be informative about information flow between brain areas (e.g., Alonso et al., 1996). However, the interpretation of the cross-correlation function becomes complicated when it is estimated from neuronal signals with bidirectional interactions, which is the dominant interaction scenario in the majority of cortico-cortical connections. The cross-correlation functions of these interactions typically lack a clear peak, and have significant values at both positive and negative lags, indicating complex, bi-directional interactions that occur at multiple delays.

To address this limitation, other methods can be used, which assess the extent to which past values of one time series are able to predict future values of another time series, and vice versa. This notion is formally implemented in the metric of Granger causality. This metric can be computed using a linear auto-regressive model fit to the data or through non-parametric spectral matrix factorization (described in more detail later), and allows for an estimation of directed interactions. In particular, it allows for a separate estimate of interaction from signal x to signal y, and from signal y to signal x.

Finally, model-free approaches have also been developed to detect directed interactions. For instance, transfer entropy is a generalized, information-theoretic approach to study delayed (directed) interactions between time series (Schreiber, 2000; Lindner et al., 2011). Transfer entropy is a more generic implementation of the maxim that causes must precede and predict their effects, and is able to detect non-linear forms of interaction, which may remain invisible to linear approaches like Granger causality. In addition, transfer entropy has also been extended to specifically measure directed interactions between ongoing phase estimates of separate signals, which is useful to study non-linear oscillatory synchronization (Lobier et al., 2014). However, due in part to its generality, it is more difficult to interpret this measure. While the model-free approaches may be useful in quantifying non-linear neuronal interactions, this review will focus on the model-based, linear methods. Linear methods are sufficient to capture a wide-array of oscillatory interactions which are expected to take place under the hypothesis that oscillatory phase coupling governs neuronal interactions. For example, if we are interested in determining whether neuronal oscillations at similar frequencies in brain areas A and B engage in oscillatory coupling with a preferred phase difference, linear measures such as coherence or PLV will capture this interaction. If on the other hand we are interested in non-linear forms of coupling, such as cross-frequency coupling (where the phase or amplitude of frequency f1 interacts with the phase or amplitude of frequency f2, where f1 ≠ f2), then other metrics would be necessary. Therefore, the choice of method or data analysis should always be guided by the underlying hypothesis that is being tested.

A particularly important distinction if we wish to study oscillations is the distinction between metrics that are computed from the time or frequency domain representation of the signals. In order to identify individual rhythmic components that compose the measured data, and specifically to study rhythmic neuronal interactions, it is often convenient to represent the signals in the frequency domain. The transformation to the frequency domain can be achieved by the application of non-parametric (Fourier decomposition, wavelet analysis, or Hilbert transformation after bandpass filtering) or parametric techniques (autoregressive models). Subsequently, frequency-domain functional connectivity metrics can be estimated to evaluate the neuronal interactions. As we will see, many of these metrics in some way or another quantify the consistency across observations of the phase difference between the oscillatory components in the signals. A non-random distribution of phase differences could be indicative of functionally meaningful synchronization between neural populations.

We also note that there are a diverse group of methods that operate purely on the amplitude (envelope) of the oscillations to quantify amplitude and power correlations independent of phase relations. These methods have been fruitfully used to quantify large-scale brain networks by several groups (Hipp et al., 2012; Vidal et al., 2012; Foster et al., 2015). Indeed, there is now a growing debate about whether phase-relations or amplitude relations govern large-scale brain networks, with evidence existing for both perspectives (see Foster et al. this issue). In the following section we will focus on frequency-domain metrics that require an estimate of the phase of the oscillations, as opposed to metrics that quantify amplitude relations. Again, the choice of method depends on the underlying hypothesis that is being tested. Phase-based methods are appropriate for testing hypotheses where phase and moment-by-moment changes in synchronization are considered to be mechanisms for neuronal communication (e.g., Fries, 2005; Bastos et al., 2014). We will also not review methods that quantify phase-amplitude coupling as they are beyond the scope of this review and have been discussed extensively elsewhere (Canolty and Knight, 2010; Aru et al., 2015).

Measures of Synchronization

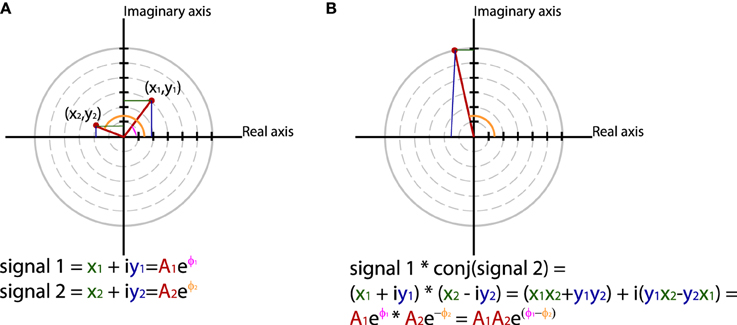

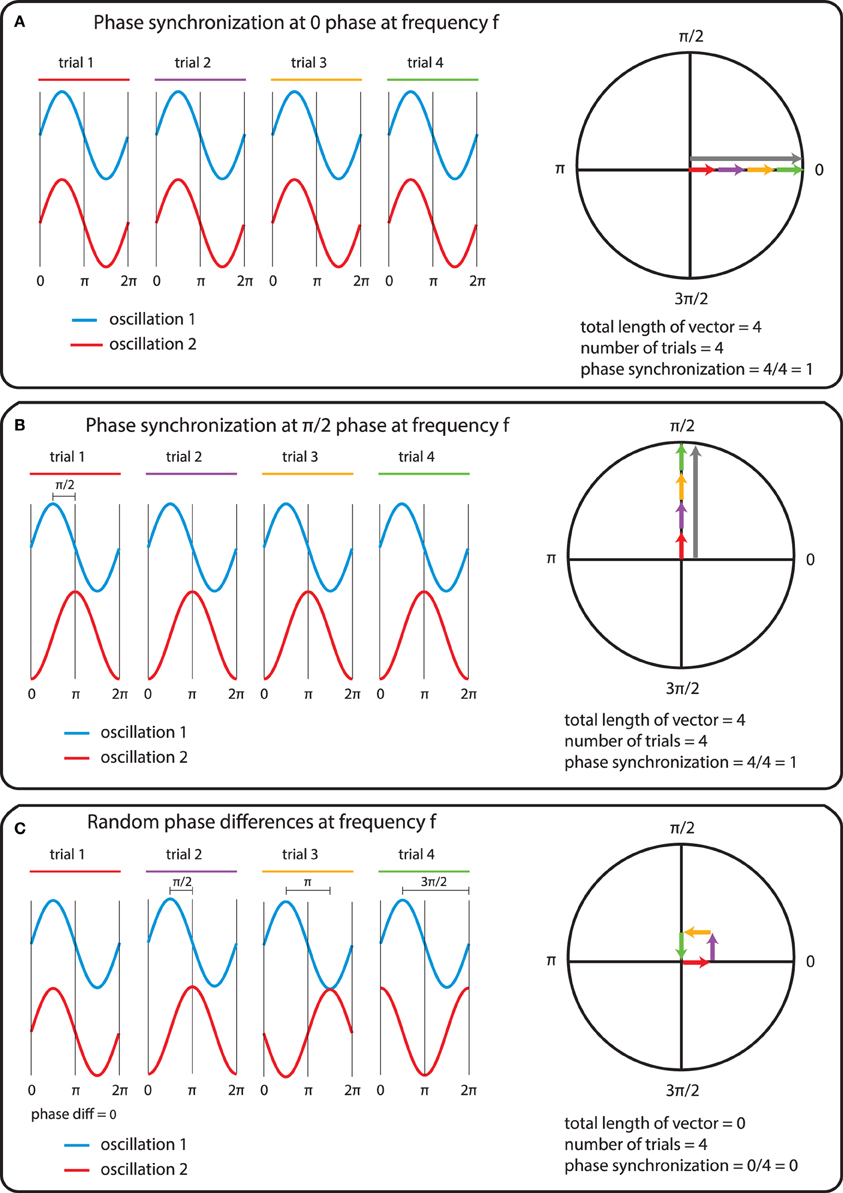

In general measures of phase synchrony are computed from the frequency domain representation of a pair of signals, which represents across a set of observations (epochs or time windows), and for a set of frequency bins, an estimate of the amplitude and the phase of the oscillations. Mathematically, it is convenient to represent these amplitudes and phases combined into complex-valued numbers, Aeiφ, or equivalently x + iy, which can be geometrically depicted as points in a 2-dimensional Cartesian coordinate system, where the magnitude of the vector connecting the point with the origin reflects the amplitude, and the angle of the vector with the X-axis reflects the phase (see Figure 2A; equivalently the x and y coordinates of this number represent the Real and Imaginary parts, respectively). The spectral representation of individual signals is combined to obtain the cross-spectral density (the frequency domain equivalent of the cross-covariance function), by means of frequency-wise multiplication of the spectral representation of one of the signals with the complex conjugate of the spectral representation of the other signal, where complex conjugation is defined as taking the negative of the phase angle. This multiplication results in a complex number, which geometrically depicts a vector in 2-dimensional space, where the magnitude of the vector reflects the product of the two signals' amplitudes, and the angle between the vector and the X-axis reflects the two signals' difference in phase (see Figure 2B). Measures of phase synchrony now aim to capture some property of the probability distribution of the single observation cross-spectral densities, quantifying the consistency of the distribution of phase differences. One way to combine the cross-spectral densities would be to take a weighted sum, which geometrically amounts to drawing all vectors head to tail, and normalize the end result. The idea is now that if there is some consistency across observations of the phase difference between the two oscillatory signals, the length of the weighted sum will have a non-zero value (because the vectors efficiently add up), whereas it will be close to zero when the individual observations' phase differences are evenly distributed between 0 and 360°. Figure 3 displays three “toy scenarios” to illustrate this concept. Imagine two oscillators that have a consistent zero-degree phase relation over many trials or observation epochs. This is depicted graphically in the time domain in the left panels of Figure 3, showing two signals (oscillation 1 and oscillation 2) that are observed for four trials. The right panels of Figure 3 show the vector sums of the cross-spectral densities. For the time being we assumed the amplitude of the oscillations to have a value of 1. In the first scenario (Figure 3A) the phase difference is the same (and 0) for each of the observations, yielding a vector sum that has a length of 4. In the second scenario, the phase difference is also consistent across observations (i.e., 90° each time). In the third scenario however, the phase difference is not consistent across observations. In this example, the individual observations' phase differences were 0, 90, 180, and 270° respectively, resulting in individual observation cross-spectral density vectors pointing right, up, left and down. This results in a vector sum that has zero length, which coincides with the fact that there was no consistent phase difference in this case. Note that real data will fall between the two extremes of perfect phase synchronization (vector sum normalized by number of epochs equals 1) and a zero phase synchronization (vector sum to zero), even in the absence of any true phase synchronization due to sample size bias (see the section on sample size bias for an in depth discussion of this issue and how it can be mitigated).

Figure 2. Using polar coordinates and complex numbers to represent signals in the frequency domain. (A) The phase and amplitude of two signals. (B) The cross-spectrum between signal 1 and 2, which corresponds to multiplying the amplitudes of the two signals and subtracting their phases.

Figure 3. The mechanics of the computation of phase synchrony. (A) An instance of perfect phase alignment at 0 radians. (B) An instance of perfect synchronization at a difference of π/2 radians. (C) Absence of phase synchronization due to inconsistent phase differences.

The Coherence Coefficient

One widely used metric quantifying phase synchrony between a pair of measured signals is the coherence coefficient. Mathematically, the coherence is the frequency domain equivalent to the time domain cross-correlation function. Its squared value quantifies, as a function of frequency, the amount of variance in one of the signals that can be explained by the other signal, or vice-versa, in analogy to the squared correlation coefficient in the time domain. The coherence coefficient is a normalized quantity bounded by 0 and 1, and is computed mathematically as:

The numerator term represents the length of the vector average of the individual trial cross-spectral densities between signal x and y at frequency ω. The denominator represents the square root of the product of the average of the individual trial power estimates of signals x and y at frequency ω.

It is usually more convenient to represent the averaged cross-spectral density in a single matrix, omitting the complex exponentials in the notation:

The diagonal elements reflect the power estimates of signals x and y, and the off-diagonal elements reflect the averaged cross-spectral density terms. The coherence can then be concisely defined as:

Coherency and the Slope of the Phase Difference Spectrum

When the magnitude operator (|…|) is omitted from the numerator in equation above, we obtain a complex-valued quantity called the coherency, where the phase difference angle may be interpretable (if there is a clear clustering of phase difference angles across trials) in terms of temporal delays. This is based on the notion that a consistent phase lag (or lead) across a range of frequency bins translates to a time lag (or lead) between the two time series. Note that the phase difference estimate in a single frequency bin may be ambiguous in its interpretation, because a, say, −150° phase delay cannot be disentangled from a +210° phase delay. This is due to the fact that the phase difference is circular modulo 360°. However, observing the phase difference over a range of frequencies may give an unambiguous interpretation of temporal delays. A disambiguated estimate of the time delay can be obtained when the rhythmic interaction can be described as a predominantly unidirectional time-lagged linear interaction. This estimate is then based on an estimate of the slope of the phase difference as function of the frequency range in which there is substantial coherence. This is because a fixed time delay between two time series leads to a phase difference that is a linear function of frequency. As an example, consider a time lag of 10 ms and a rhythmic process with substantial oscillatory power in the frequency range between 8 and 12 Hz. At 8 Hz, i.e., at a cycle duration of 125 ms, a time shift of 10 ms amounts 10/125 (0.08) of an oscillatory cycle (which amounts to 28.8°). At 10 Hz, the same time shift amounts to 10/100 of an oscillatory cycle (36°), and at 12 Hz, a time shift of 10 ms amounts to 10/83.33 (0.12) of an oscillatory cycle (43.2°). We would like to emphasize, however, that an interpretation of the estimated slope of the phase difference spectrum in terms of a temporal delay (and thus as an indicator of directionality) is only valid under rather ideal circumstances, where the interaction is predominantly unidirectional and well-captured under the assumption of linearity. Under non-ideal circumstances, the phase difference spectrum is a complicated function of frequency, and using it alone to assign directionality is un-principled (Witham et al., 2011; Friston et al., 2012).

Phase Slope Index

Compared to the slope of the phase difference spectrum, which assumes time-lagged and linear interactions, the phase slope index (PSI) is a more generic quantity to infer dominant unidirectional interactions (Nolte et al., 2008). It is computed from the complex-valued coherency, and quantifies the consistency of the direction of the change in the phase difference across frequencies. Given a pre-specified bandwidth parameter, it computes for each frequency bin the change in the phase difference between neighboring frequency bins, weighted with the coherence. As a consequence, if for a given frequency band surrounding a frequency bin the phase difference changes consistently across frequencies, and there is substantial coherence, the PSI will deviate from 0. The sign of the PSI informs about which signal is temporally leading the other one. As discussed in the previous section, under situations where interactions are bi-directional, the phase difference spectrum (and consequently PSI) may fail at correctly describing the directionality. For further discussion of this, the reader is referred to Witham et al. (2011) and Vinck et al. (2015).

Imaginary Part of Coherency

When the complex-valued coherency is projected onto the imaginary axis (y-axis) we obtain the imaginary part of the coherency (Nolte et al., 2004). This measure has gained some momentum over the past years, in particular in EEG/MEG connectivity studies (García Domínguez et al., 2013; Hohlefeld et al., 2013). Discarding contributions to the connectivity estimate along the real axis explicitly removes instantaneous interactions that are potentially spurious due to field spread, as discussed in depth in a later section.

Phase Locking Value

When applying the formula for the computation of coherence (Equation 1) to amplitude normalized Fourier transformed signals, we get the phase locking value (PLV) (Lachaux et al., 1999):

As a result of these individual observation normalizations, the PLV is computed as the length of the vector-average of a set of unit-length phase difference estimates. In motivating the use of PLV, as opposed to coherence, it is often claimed that the former reflects more strictly phase synchronization than coherence, because the latter confounds the consistency of phase difference with amplitude correlation. From a mathematical point of view this may be true, but on the other hand one could argue that it is more “difficult” to obtain a meaningful non-zero coherence value in the absence of consistent phase differences, compared to when there are no amplitude correlations. Intuitively, when all individual observation cross-spectral density estimates are pointing in random directions (no phase synchrony), even in the presence of perfect amplitude correlations, expected value of their vector average will still be comparatively small. On the other hand, if all individual cross-spectral density estimates are pointing more or less into the same direction (strong phase synchrony), even in the absence of amplitude correlations, the expected value of their vector average will still be appreciable. Also, one could argue, in the case of coherence, that assigning a stronger weight to observations with a large amplitude product, one is favoring those observations that have a higher quality phase difference estimate. This realistically assumes that a higher amplitude reflects a higher SNR of the sources of interest and thus, a better quality phase estimate.

Other Measures to Quantify Consistent Phase Differences

In addition to the quantities described in the previous sections, over the past years various other metrics have been defined to quantify synchronized interactions between neuronal signals. The motivation for the development of these metrics is that most connectivity metrics suffer from interpretational difficulties. These difficulties, which are discussed and illustrated in more detail below, have prompted methods developers to define metrics that are less prone to suffer from these problems. We have already discussed the imaginary part of coherency and the phase slope index, and this section describes two additional measures that are increasingly popular, the phase lag index (PLI), and pairwise phase consistency (PPC).

The PLI is a metric that evaluates the distribution of phase differences across observations. It is computed by averaging the sign of the per observation estimated phase difference (Stam et al., 2007). It is motivated by the fact that non-zero phase differences cannot be caused by field spread (just like with the imaginary part of coherency and the PSI). More recently, some adjustments to the PLI have been proposed, yielding the weighted PLI and debiased weighted PLI to make the metric more robust against field spread, noise and sample-size bias (Vinck et al., 2011).

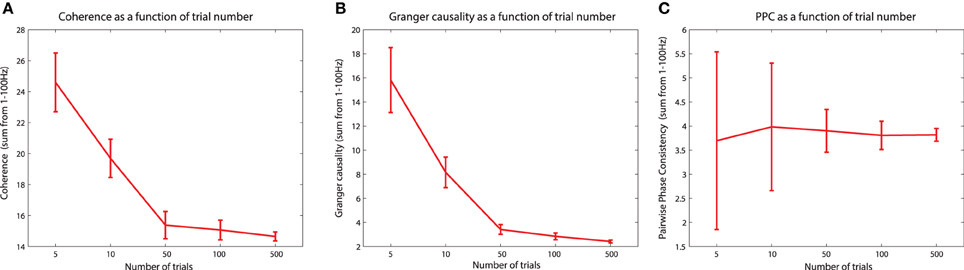

The PPC is a measure that quantifies the distribution of phase differences across observations (Vinck et al., 2010). Unlike the PLV, which is computed directly as a vector average of the relative phase across observations, the PPC is computed from the distribution of all pairwise differences (between pairs of observations) of the relative phases. The idea behind this is that, similar to the situation when investigating the distribution of relative phases directly, the distribution of pairwise differences in the relative phases will be more strongly clustered around an average value in the presence of phase synchronization. When no phase synchronization is present, the individual relative phase vectors are distributed around the unit circle, as are all pairwise differences of these relative phase vectors. The advantage of PPC beyond PLV is that this metric is not biased by the sample size that is used for the estimation. This means that the expected value of PPC does not change as a function of trial number (see section titled “The sample size bias problem” for more details).

In essence, many of the measures mentioned as such are not based on a principled mathematical approach (as opposed to for instance coherence and Granger causality which are rooted in the theory of stochastic processes). For instance PLI, the imaginary part of the coherency, and the phase slope index are pragmatic measures primarily put forward to address the interpretational problem of field spread, and by design intend to capture similar features of the interaction between time series. It is often an empirical question as to which of the measures is most suited to be used, and ideally one should expect that the conclusions drawn do not strongly depend on the measure that was chosen. Therefore, in general it is advisable to interrogate the data using several of these measures, in order to get a feel for how the estimates relate to one another.

Going Beyond Pairwise Interactions

So far we have discussed connectivity metrics that are defined between pairs of signals. Often, however, more than two signals have been recorded, and it may be relevant to investigate in more detail the pattern of multiple pairwise interactions. For this, graph theoretic approaches can be employed (Sporns, 2011). These approaches build on a valid quantification of the connectivity, are discussed extensively elsewhere and are beyond the scope of this tutorial. It is brought up here as a prelude to the next section, where we discuss the problem of common input. In general, the problem at stake is the correct inference of a direct interaction in the presence of other (possibly unobserved) sources. Although latent, unobserved sources pose a fundamental and irresolvable problem, information from sources that have been observed can be used to remove indirect influences on the estimation of connectivity. One of the ways in which this can be done in the context of coherence analysis, is by means of using the partial coherence (Rosenberg et al., 1998). If multiple signals have been recorded, one can compute for any signal pair the so-called partialized cross-spectrum, which is obtained by removing the linear contribution from all the other signals. From the partialized cross-spectrum, the partial coherence can be easily obtained. The partialized cross-spectrum can be obtained as follows. Starting from the full cross-spectral density matrix,

we can partialize for the linear contributions from signals z1-zn to the cross spectrum between signal x and y as follows:

Spike-Field Coherence

With invasive electrophysiological signals, it is possible to record action potentials from single neurons and/or clusters of neurons (sometimes referred to as multiunit activity). In this situation, it can be informative to examine how ongoing oscillations in the simultaneously recorded LFP or EEG are related to the spikes. To this end, spikes from a given unit can be represented as a time series of ones and zeros, at the same sampling rate as the continuous LFP/EEG signals (note that it is possible to represent the spikes as a vector of spike times—this can sometimes lead to a sparser representation of the data). Once a suitable representation of the spikes is chosen, we can compute any of the above-mentioned metrics of synchronization between spikes and fields, and indeed also between different spike trains. This can often reveal significant modulation of spike timing relative to field oscillations (e.g., significant spike-field coherence), which can also be sensitive to task variables (e.g., Fries et al., 2001; Gregoriou et al., 2009). Spike-field analysis comes with its own host of caveats and challenges, which are beyond the scope of this review (interested readers can refer to Vinck et al., 2012; Ray, 2015).

Quantification of Frequency Resolved, Directed Interactions with Granger Causality

So far, we have reviewed connectivity metrics that at best infer directionality of interactions based on the sign of the phase difference of the band-limited oscillatory signal components. Yet, the practical applicability of these techniques is limited to cases where there is a consistently time-lagged and predominantly uni-directional interaction. Granger causality and related metrics are capable of quantifying bi-directional interactions and provides two estimates of directed connectivity for a given signal pair, quantifying separately the directed influence of signal x on signal y, and the directed influence of signal y on signal x. Originally, the concept of Granger causality was applied to time series data in the field of economics (Granger, 1969), and extension of the concept to the frequency domain representation of time series was formulated by Geweke (1982). Although excellent introductory texts exist that explain the concept of frequency domain Granger causality and their application to neuroscience data (Ding et al., 2006), we briefly review the essential concepts in some detail in the Appendix (Supplementary Material). At this point, we restrict ourselves to the necessary essentials.

Time Domain Formulation

In essence, Granger causality represents the result of a model comparison. It is rooted in the autoregressive (AR) modeling framework, where future values of time series are modeled as a weighted combination of past values of time series. Specifically, the quality of an AR-model can be quantified by the variance of the model's residuals, and Granger causality is defined as the natural logarithm of a ratio of residual variances, obtained from two different AR-models. One of these models reflect a univariate AR-model, where values of time series x are predicted as a weighted combination of past values of time series x. The other model is a bivariate AR-model, where the values of time series x are predicted not only based on past values of x, but also based on past values of another time series y. A substantial reduction of the variance of the residuals comparing the univariate model to the bivariate model implies that the inclusion of information about the past values of signal y in the prediction of signal x (above and beyond inclusion of only past values of signal x) leads to a better model for time series x. In these cases, the variance ratio is larger than 1, which leads to a Granger causality value that is larger than 0, signal y is said to Granger cause signal x (Granger, 1969; Ding et al., 2006; Bressler and Seth, 2011). Applying the same logic but now building autoregressive models to predict signal y will yield an estimate of Granger causality from signal x to y.

Frequency Domain Formulation

The concept of Granger causality can also be operationalized in the frequency domain (Geweke, 1982). We refer the interested reader to the Appendix (Supplementary Material and the references mentioned therein) for more details. Here, it is sufficient to state that computation of Granger causality in the frequency domain requires the estimation of two quantities: the spectral transfer matrix (H(ω)), which is frequency dependent, and the covariance of the AR-model's residuals (Σ). The following fundamental identity holds: H(ω)ΣH(ω)* = S(ω), with S(ω) being the cross-spectral density matrix for signal pair x, y at frequency ω. In other words, the conjugate-symmetric cross-spectral density can be obtained by sandwiching the covariance matrix of the residuals between the spectral transfer matrix. From the cross-spectrum, the spectral transfer matrix and the residuals' covariance matrix, the frequency-dependent Granger causality can be computed as follows:

Relationship Between Frequency Domain Granger Causality and Coherence

Just as in the time domain formulation of Granger causality, it is possible to define a measure of total interdependence, based on the cross-spectral density estimates [more details are provided in the Appendix (Supplementary Material)]:

The fraction between the brackets is equivalent to the squared coherence coefficient, as shown in an earlier section. In other words, there is a one-to-one relationship between the coherence coefficient and the total interdependence. In analogy to the time domain formulation, the frequency specific total interdependence can be written as a sum of three quantities: GCx, y(ω) = GCx→y(ω) + GCy→x(ω) + GCx·y(ω), where the instantaneous causality term is defined as:

This latter term reflects the part of the total interdependence that cannot be accounted for by time-lagged (phase-shifted) interactions between signals x and y, reflecting instantaneous common input from latent sources. Note that in the case of zero-phase lag synchronization the instantaneous causality term is not necessarily larger relative to the causal terms. Specifically, in a system with bidirectional interactions, where the magnitude and phase delay of the transfer function are approximately equal in both directions (i.e., from x to y, and from y to x), the cross-spectral density matrix can report strong synchronization at zero-phase delay that is nonetheless brought about by strong time-delayed causal interactions in both directions. Note, however, that theoretical work points out that zero-lag phase coupling is much more likely to occur in the presence of a dynamic relay through a third source, which gives common input to stabilize phase relations across the network at zero-lag (Vicente et al., 2008). Practically, this situation can be distinguished from a purely bi-directional interaction only if the two nodes with zero-lag synchronization were observed simultaneously with the third node that may (or may not) provide common inputs. More details on this scenario and how to detect it in physiological data are provided in the section “The common input problem.”

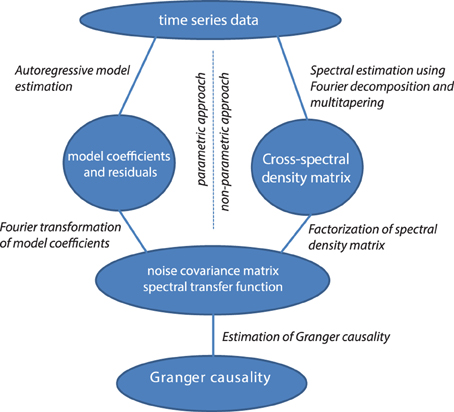

Non-Parametric vs. Parametric Computation of Granger Causality

Granger causality in the frequency domain can be calculated with parametric methods (with auto-regressive models, as discussed, left half of Figure 4) or with non-parametric methods (with Fourier or wavelet-based methods). These approaches differ in how the covariance of the residuals and the transfer matrices are computed (see the right half of Figure 4). The non-parametric approach is based on the fact that the cross-spectral density matrix for a given frequency is equal to the model's residuals covariance matrix sandwiched between the transfer matrix for that frequency, as outlined above:

Starting from the cross-spectral density matrix (and thus going into the opposite direction) it is possible to factorize the cross-spectral density matrix into a “noise” covariance matrix and spectral transfer matrix by applying spectral matrix factorization (Wilson, 1972)—which provides the necessary ingredients for calculating Granger causality (see Equation 4, Dhamala et al., 2008). It has been shown that parametric and non-parametric estimation of Granger causality yield very comparable results, particularly in well-behaved simulated data (Dhamala et al., 2008).

Figure 4. Data processing pipeline for the computation of Granger causality, using the parametric or non-parametric approach.

The main advantage in calculating Granger causality using this non-parametric technique is that it does not require the determination of the model order for the autoregressive model. The particular choice of the appropriate model order can be problematic, because it can vary depending on subject, experimental task, quality and complexity of the data, and model estimation technique that is used (Kaminski and Liang, 2005; Barnett and Seth, 2011). In contrast, the non-parametric estimation of Granger causality utilizes data points from the entire frequency axis, the number of which is essentially determined by the number of samples in the data window that is used for the analysis.

In comparison to the parametric estimation technique, the non-parametric spectral factorization approach requires more data and a smooth shape of the cross-spectral density (i.e., no sharp peaks as a function of frequency) to converge to a stable result. This can be seen most acutely when attempting to compute Granger causality from single trials. Although both parametric and non-parametric techniques can be used for single-trial estimates of directed connectivity, it appears that parametric estimates are more sensitive than non-parametric estimates, especially when the model order is known (Brovelli, 2012; Richter et al., 2015).

Bivariate vs. Multivariate Spectral Decomposition

It is relevant to note that, in multichannel recordings, the spectral transfer matrix can be obtained in two ways, irrespective of whether it is computed from a fitted autoregressive model, or through factorization of a non-parametric spectral density estimate. One can either fit a full multivariate model (or equivalently, do a multivariate spectral decomposition), where all channels are taken into account, or one can do the analysis for each channel pair separately. The latter approach typically yields more stable results (e.g., because it involves the fitting of fewer parameters), but the advantage of the former approach is that information from all channels is taken into account when estimating the interaction terms between any pair of sources. In this way, one could try and distinguish direct from indirect interactions, using an extended formulation of Granger causality, called partial Granger causality (Guo et al., 2008), or conditional Granger causality (Ding et al., 2006; Wen et al., 2013). Also, the multivariate approach yields a spectral transfer matrix that can be used to compute a set of connectivity metrics, which are related to Granger causality. These metrics are the directed transfer function (DTF) with its related metrics (Kamiñski and Blinowska, 1991), and partial directed coherence (PDC) with its related metrics (Baccalá and Sameshima, 2001). These quantities are normalized between 0 and 1, where the normalization factor is either defined as the sum along the rows of the spectral transfer matrix (for DTF), or as the sum along the columns of the inverse of the spectral transfer matrix (for PDC). By consequence of these normalizations, DTF from signal y to x reflects causal inflow from y to x as a ratio of the total inflow into signal x, while in its original formulation PDC from signal y to signal x reflects the causal outflow from y to x as a ratio of the total outflow from signal y. These measures, and their derivatives, along with motivations for preferring one over the other metric, are discussed in more detail in for example (Blinowska, 2011).

Limitations and Common Problems of Functional Connectivity Methods

The following section outlines some issues that warrant caution with respect to the interpretation of the estimated connectivity. The core issue at stake is whether the estimate of the connectivity (or the estimate of the difference in connectivity between experimental conditions) reflects a genuine effect in terms of (a change in) neuronal interactions. As we will illustrate by means of simple simulations, there are various situations that cause non-zero estimates of connectivity in the absence of true neuronal interactions. The main cause of these spurious estimates of connectivity is the fact that in MEG/EEG/LFP recordings the signals that are used for the connectivity estimate always to some extent reflect a (sometimes poorly) known mixture of a signal-of-interest (which is the activity of a neuronal population that we are interested in) and signals-of-no-interest (which we will call noise in the remainder of this paper). Another cause of spurious estimates is more related to interpretation of the observed connectivity patterns. This relates to the fact that it is impossible to state whether an observed connection is a direct connection, or whether this connection is mediated through an unobserved connection.

In what follows, we describe five common practical issues that warrant caution with respect to the interpretation of connectivity estimates. We illustrate these problems using simple simulations that are based on MATLAB-code and the FieldTrip toolbox, allowing the interested reader to get some hands-on insight into these important issues. The code has been tested on MATLAB 2013a/b and 2014a/b, using Fieldtrip version “fieldtrip-20150816.” The first three problems (the common reference problem, the volume conduction/field spread problem, and the SNR problem) are a consequence of the fact that the measured signals are always a mixture of signal-of-interest and noise, and the 4th is the problem of unobserved common input. The 5th problem is caused by unequal numbers of epochs or observation periods used to compute metrics of functional connectivity when making comparisons between conditions or across subjects.

The Common Reference Problem

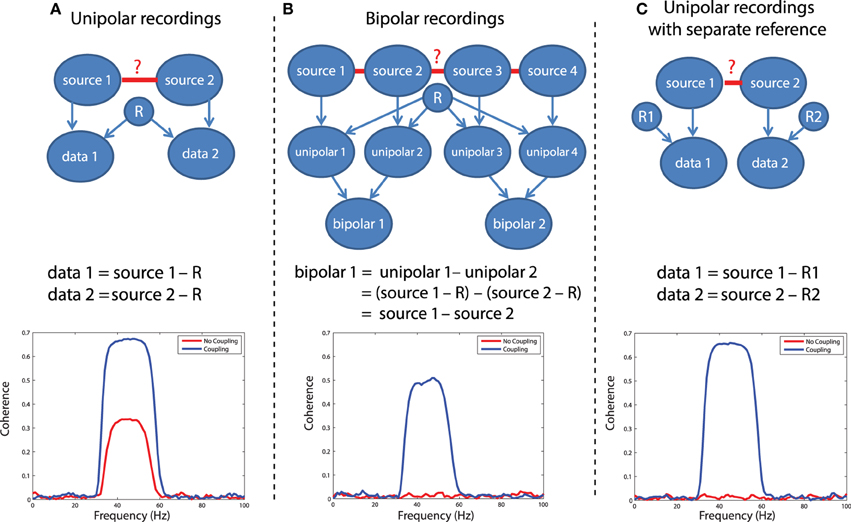

In LFP or EEG recordings, spurious functional connectivity estimates can result from the usage of a common reference channel. This problem is depicted graphically in Figure 5A. Imagine two recorded time series, data 1 and data 2. Each of these signals reflects the difference of the electric potential measured at the location of the electrode and at the location of the reference electrode. If the same reference electrode is used for both electrodes that are subsequently used for the connectivity estimation, the fluctuations in the electric potential at the reference location will be reflected in both time series, yielding spurious correlations at a zero time lag. Any connectivity metric that is sensitive to correlations at a zero time lag will in part be spurious. The extent to which the estimated connectivity is spurious depends on the relative strength of the potential fluctuations at the recording and reference sites. Obviously, the large majority of EEG and LFP recordings use a single reference electrode in the hardware. The following simulation illustrates the effect of a common reference electrode. We will simulate 30–60 Hz oscillatory activity in two neuronal sources, and this activity is measured by two electrodes that share a common reference electrode (which is also assumed to have an oscillatory component). We will estimate the coherence between the measured signals, in the presence and in the absence of coupling between the underlying sources.

Figure 5. Illustration of different referencing schemes and how each effects the calculation of coherence with and without true neuronal coupling. (A) The case of unipolar recordings, which introduce spurious coherence values in the absence of coherence. (B) The bipolar derivation technique, which largely resolves the common reference problem. (C) The separate reference scheme, which also is not sensitive to common reference problems.

In this simulation, we have assumed a generative model consisting of a linear mixture between various sources that each have an oscillatory component in the 30–60 Hz range. Independent sources are projected directly to the relevant data, denoted by arrows between e.g., source 1 and data 1 in Figure 5A. The common reference is treated as a shared signal that is projected with equal weight to each data channel (note the arrows between R and each of the data channels in Figure 5). Coupling between sources is simulated as a shared signal that is projected into each source in some mixture, specified in the script (sim_commonreference.pdf). Using this generative model, it is evident that when no real coupling is present between source 1 and source 2, there is significant artifactual coherence between data 1 and data 2, due to the common reference (Figure 5A, red trace). This is a problem, because in the absence of any underlying neuronal interaction, any connectivity estimate should be close to zero. When real coupling is introduced between source 1 and source 2, as one can see in Figure 5A, the coherence between data 1 and data 2 increases—this reflects the presence of both the common reference and real coupling. This simulation was performed assuming strong coupling between the sources. In the presence of weak coupling, the artifactual coherence may sometimes predominate—and obscure—the real coherence caused by true interactions.

One solution to the problem is to record additional channels and perform bipolar derivation to remove the common reference, and then calculate connectivity between locally-rereferenced bipolar derivations that do not share a common unipolar channel (Figure 5B). This procedure has for instance been applied in Bollimunta et al. (2009) and in Bosman et al. (2012), and relies on two assumptions: first, that the reference is equally present in the unipolar channels that will be subtracted, and second, that each of the unipolar channels reflects a different mixture of the underlying neuronal sources—otherwise, the same logic behind eliminating the common reference through bipolar derivation would also result in a cancelation of the neuronal signal. For a more rigorous discussion of the bipolar derivation procedure and its applications to neural data, see Trongnetrpunya et al. this issue. In Figure 5B each unipolar channel is depicted as reflecting the activity of an independent source, but each unipolar channel could also reflect a mixture of underlying sources, and the same logic would apply. In this scenario, the bipolar derivation removes the common reference (see the equation in Figure 5B), and the resulting bipolar signal is a subtraction of two local source activities. When coherence is now calculated directly between bipolar site 1 and bipolar site 2, it is not biased by the common reference, as shown in the lower panel of Figure 5B: when no coupling is present between the sources, coherence is close to zero (how close will depend on how many trials have gone into the analysis and the resulting bias—see section on the sample size bias), but in the presence of coupling, coherence reaches a value of 0.5.

A second possible solution to this problem is shown in Figure 5C, which is to separately reference each channel. In this case (lower panel of Figure 5C), there is again no artifactual coherence component. Therefore, while the separate referencing scheme does present a solution in principle, it may not be practical for large-scale, high density recordings. The simulations above can be realized by running the code in script sim_commonreference.pdf. Note that in these simulations, the reference was also assumed to have an oscillatory 30–60 Hz component—however, if the reference consisted of white noise, or a mixture of white and colored noise, and coherence were calculated between channels which both had a common reference, then the respective coherence spectrum would reflect whatever the underlying spectral shape of the reference was—due to its instantaneous mixture into both channels.

The Volume Conduction/Field Spread Problem

Another important issue in the quantification and interpretation of neuronal interactions, particularly when the estimates are based on non-invasive recordings, is caused by volume conduction. Strictly speaking, volume conduction refers to the currents flowing in the tissues surrounding active neuronal sources. Colloquially it has been adopted as a term to reflect more generally the phenomenon of the spatial spread of electromagnetic fields, which cause one recording channel or sensor to pick up the activity of multiple neuronal sources. In the case of magnetic field recordings it is sometimes more aptly referred to as field spread. At its worst, field spread can create purely artifactual coherence or phase-locking, meaning that the presence of functional connectivity between two signals would indicate not the presence of a true neuronal interaction, but instead the presence of activity from the same underlying source at the two channels. An important property of volume conduction and field spread, at least in the frequency range that is relevant for neuroscience, is that its effects are instantaneous. That is, if a dominant rhythmic neuronal source is visible at two sensors at once, the phase observed at these sensors is the same (or at a difference of 180°, when each of the sensors “sees” an opposite pole of the dipole). This property of instantaneity can be exploited to use connectivity measures that discard contributions of 0 (or 180) degrees phase differences. This will be explained in more detail below.

When considering the different electrophysiological recording techniques, field spread is considered the least problematic in invasive recordings. Spiking activity of individual neurons is by definition very focal spatially, and volume currents associated with this spiking can only be picked up at a distance of a few tens or hundreds of microns. Therefore, for the quantification of spike-spike or spike-field interactions, field (Kajikawa and Schroeder, 2011) spread is hardly an issue. In the interpretation of synchronization estimated between two LFP signals, volume conduction needs to be taken into account. The reason is that the local field potentials may reflect volume currents propagating over larger distances, exceeding 1 cm (Kajikawa and Schroeder, 2011). Field spread is by far the most problematic when performing non-invasive measurements, because of the large distance between the sensors and the neural sources, and because of the spatial blurring effect of the skull on the electric potential distribution on the scalp with EEG (Nunez et al., 1997, 1999; Srinivasan et al., 2007; Winter et al., 2007; Schoffelen and Gross, 2009). By consequence, a single underlying neuronal source will be seen at multiple EEG or MEG sensors—causing spurious correlation values between the sensors.

In the presence of field spread any given source is “visible” on multiple sensors/electrodes at once, and several strategies may be employed to alleviate the adverse effects of field spread. The first strategy attempts to “unmix” the measured signals to derive an estimate of the underlying sources. In the context of EEG/MEG recordings one should think of the application of source reconstruction algorithms (Schoffelen and Gross, 2009). In the context of LFP recordings one should think of the application of a re-referencing scheme, using for example a local bipolar derivation (as reviewed in the previous section), or estimating the current source density. The second strategy relates to the use of well-controlled experimental contrasts. The assumption here is that volume conduction affects the connectivity estimates in a similar way in both conditions, and subtraction will effectively get rid of the spurious estimates. A third strategy, as already mentioned above, would be to use connectivity metrics that capitalize on the out-of-phase interaction, discarding the interactions that are at a phase difference of 0 (or 180°). Examples of these metrics are the imaginary part of the coherency, the (weighted) phase lag index, or the phase slope index. At the expense of not being able to detect true interactions occurring at near zero-phase difference, these metrics at least provide an account of true (phase-lagged) interactions between signal components. Unfortunately, neither the application of source reconstruction techniques (which moreover add a level of complexity to the analysis of the data), nor the use of experimental contrasts or phase-lagged interaction measures will fully mitigate the effects of volume conduction. This has been described in more detail elsewhere (Schoffelen and Gross, 2009).

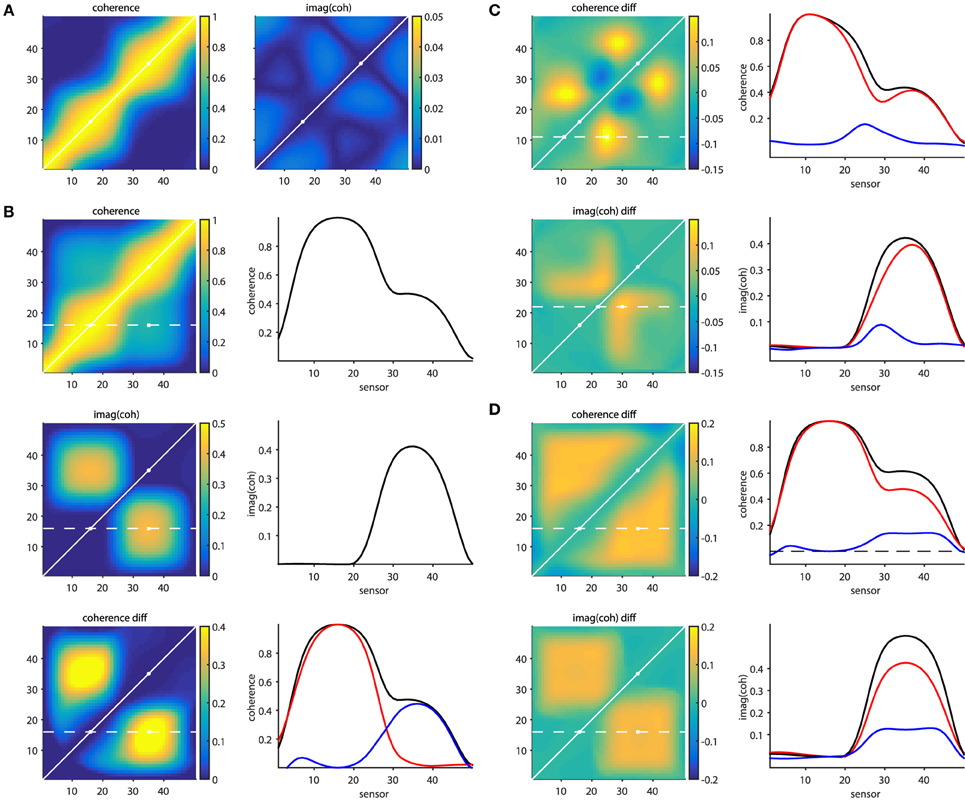

The script sim_volumeconduction.pdf illustrates some of the issues mentioned above, as well as the approaches that partially overcome them. The general approach in this set of simulations is that a set of signals is simulated at 50 “measurement locations” (MEG/EEG channels, or at “virtual electrodes”). Each of the signals consists of a mixture (due to field spread) of the activity of the underlying sources. The source activity time courses are generated by means of a generative autoregressive model. The source-to-signal mixing matrix was defined as a spatial convolution of the original source activations with a 31-point Hanning window. In other words we can consider the signals to consist of a weighted combination of the original source and the 15 most nearby sources on either side, with a spatial leakage that is dependent on the distance.

Figure 6A shows connectivity matrices for all sensor pairs, and the source signals were constructed such that none of the underlying sources were interacting (i.e., there were no cross-terms in the autoregressive model coefficients). The noise covariance matrix had only non-zero values on the diagonal, with large values for the 16th and the 35th source. The panel on the left, displaying coherence, illustrates the seed blur as a consequence of the field spread. Each row (column) in the matrix reflects the coherence with respect to a specific seed sensor (indexed by the row number). The points closer to the diagonal are the locations that suffer most from this leakage. It can be seen that the seed blur is considerable, even if the signal is low (outside the two “active” points indicated with the white circles). Also, in the presence of a signal (i.e., when seeding from the “active” locations), the seed blur is spatially more widespread. The panel on the right shows the imaginary part of coherency, and demonstrates that (1) this quantity is low in the absence of time-lagged interactions and (2) that the seed blur is abolished. Figure 6B shows a simulation where the 16th and 35th source were actually “connected” with a non-zero time lag. The panels on the left show the coherence matrix, the imaginary part of the coherency, and the difference between the top left subpanels in b and a. The panels on the right display the connectivity in one of the rows in these matrices (indicated with the dotted white line on the left), reflecting the connectivity seeded from the 16th source. The bottom right subpanel in Figure 6B shows the coherence from the top left subpanel in Figure 6B in black, the coherence from the top left subpanel in a in red, and their difference in blue. So far, these simulations demonstrate in a very simplified scenario the effects of field spread, as well as the remedial effects provided by subtracting two conditions with different levels of connectivity between the active sources, or by focusing on the time-lagged component of the connectivity.

Figure 6. Effects of field spread on the estimation of connectivity. (A) In absence of connectivity, field spread leads to spurious coherence (left panel), while the imaginary part of coherency mitigates this effect (right panel). (B) In the presence of time-lagged interactions, “seed blur” caused by field spread leads to only a “shoulder” in the coherence, and not to a distant peak (top right panel). The imaginary part of coherency correctly identifies a distant peak (middle right panel). Bottom panels: the distant coherence peak is revealed when taking the difference (blue line) between the connected (black line) and unconnected (red line) situations. (C) Spurious differential effects show up both in the coherence (top panels) and in the imaginary part of coherency (bottom panels) when the SNR changes from one condition to another. (D) Spurious differential effects show up both in the coherence (top panels) and in the imaginary part of coherency (bottom panels) when the amplitude of one of the active sources changes from one condition to another.

Real experimental data, however, is hardly ever as well-behaved as the simulated data presented above. The following provides some non-exhaustive examples of cases where the remedial strategies may yield results that can be wrongly interpreted. Figure 6C shows a situation where a true connection exists between the 16th and the 35th source, which doesn't change in strength from one condition to another. In one of the conditions an additional source is active (at location 27), which is not connected to any of the other sources. The difference maps, however, show quite some spatial structure, which is due to the differential effects exerted by the field spread on the connectivity estimates. For example, seeding from the 11th source using coherence as a connectivity metric yields a spurious non-zero difference around the 25th source. This is shown in the right panel of Figure 6C, where the individual conditions' seed-based connectivity estimates are displayed as red and black lines, and their difference is displayed in blue. Likewise, seeding from the 22nd source using the imaginary part of coherency yields a spurious non-zero difference around the 28th source. The implication of this is that differences in the activity in the contributing sources can yield spurious differences in estimated connectivity. One could argue that an appropriate seed selection would have prevented this erroneous interpretation. Had we focused on the active sources to begin with, the problem may have been less severe. Although there may be some truth in this, in practice it is often difficult to select the proper seeds a priori, for example because the relevant sources are not necessarily the ones that have the highest amplitude, and even if the seed locations are appropriately selected, spurious connectivity can still arise.

This is illustrated in Figure 6D, where two conditions are compared, with unchanging connectivity between the activated sources (16 and 35), but with a change in power for one of them. The panels on the right show the seeded connectivity (from source 16) for the condition with low (red lines) and high (black lines) power for source 35, as well as their difference (blue lines).

The difficulty of interpreting connectivity estimates due to field spread, as described above, can also be problematic for a correct inference of directed connectivity, e.g., by means of Granger causality, or related quantities. The reason for this is that the linear mixing of the source signals due to the field spread leads to signal to noise ratio differences between channels (discussed more in the next section), and inaccuracies in the estimation of the spectral transfer matrix and the noise covariance. Although some simulation work indicates that the true underlying network connectivity may be recovered by applying a renormalized version of PDC (Elsegai et al., 2015), Vinck et al. (2015) showed that instantaneous mixing is problematic for an accurate reconstruction of Granger causality. These authors propose to use a heuristic based on the investigation of the instantaneous interaction between signals in relation to their time-delayed interactions, in order to discard or accept the estimated directed connectivity to be trustworthy.

The Signal-to-Noise Ratio Problem

Another issue that leads to interpretational problems of estimated connectivity is what we call here the SNR problem. In some way this problem is related to the field spread problem discussed above, since its underlying cause is the fact that the measured signals contain a poorly known mixture of signal-of-interest, and “noise.” Some consequences of this have already been illustrated to some extent above, where the comparison between conditions leads to spurious differences in connectivity, due to differences in SNR across conditions. Apart from posing a problem for correctly inferring a difference in connectivity across conditions, SNR differences can also be problematic when inferring differences in directional interactions between sources. It should be noted that this problem can also arise in the absence of volume conduction (e.g., when computing connectivity from locally-rereferenced LFP recordings), and therefore we believe that this problem merits a separate discussion. The core issue here is that spurious directional connectivity estimates can be obtained from two signals that have each been observed with different amounts of signal and noise. Such a situation can for instance arise when across LFP recording sites there are differences in sensor noise (e.g., due to differences in amplifier characteristics), or differences in distance to the active sources of interest, causing a difference in signal strength. While this is a problematic issue for many metrics of directed connectivity, in this section we focus specifically on how estimates of Granger causality can be corrupted by differences in SNR between signals. We illustrate the issue by presenting some simulations, which can be realized by running the code in script sim_signaltonoise.pdf.

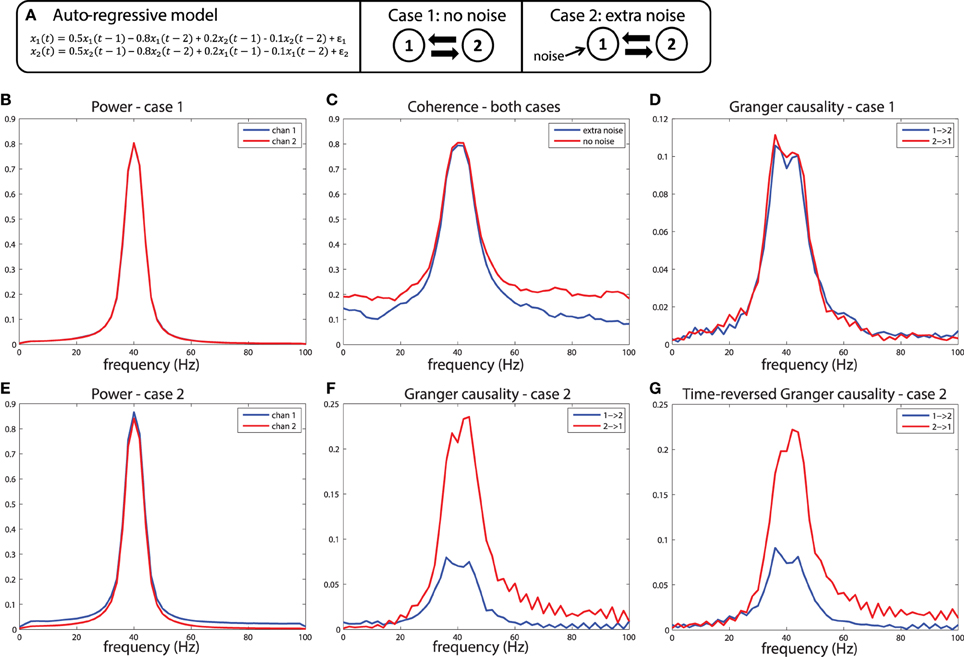

To understand why differences in SNR are likely to be problematic for the estimation of Granger causality, it is useful to remember that one variable has a Granger causal influence to another if the past of one time series can enhance predictions about another. In general, this approach will work to detect true connectivity, however, when applied to noisy data, this definition of causality based on prediction can lead to unexpected consequences. Imagine a situation shown in Figure 7A: variables x1and x2 are generated by an auto-regressive process that is a function of their own past at time lags 1 and 2 (the auto terms), and also a function of the past of the other variable (the cross terms), also at time lag 1 and 2. Crucially, the auto-regressive coefficients of both the auto-terms and the cross terms are identical, and the variance of the innovations (ε1, ε2) of both processes are also identical, meaning that the two variables influence each other with equal strength. Therefore, by construction, Granger causality from x1 to x2 should be identical compared to Granger causality from x2 to x1. This is indeed the case when the outputs of such a generative process are observed without measurement noise, illustrated in Figures 7B–D. In this case, as expected, both variables have nearly identical power spectra peaking at 40 Hz (Figure 7B), have a coherence spectrum that also peaks at 40 Hz (Figure 7C), and have approximately equal Granger causality at 40 Hz in both directions (Figure 7D). Note that the slight difference in Granger causality from x1 to x2 vs. x2 to x1 is due to estimation error, which approaches zero as the number of realizations of the model and subsequent observations are repeated.

Figure 7. A simulation of the signal to noise ratio problem. (A) Two nodes interact bidirectionally with equal connectivity strengths in the two directions, and the data is observed without (case 1) or with (case 2) measurement noise. (B) Power for case 1, (C) Coherence for case 1 and 2, and (D) Granger causality estimates for case 1. (E) Power, (F) Granger causality estimates for case 2. (G) Granger causality estimates after time-reversing the data produced by case 2.

Now let us consider case 2, where we observe the same system, but in the presence of noisy measurements. In this case, white noise is added to x1, but not to x2. The first result of this manipulation is that the power spectrum of x1 is shifted upward (Figure 7E), a result of adding power at all frequencies (i.e., white noise) to that channel. The coherence between the channels is also modulated by this manipulation, shown in Figure 7C, with a reduction in coherence at a broad range of frequencies when extra noise is present. When looking at the Granger causal estimates, shown in Figure 7F, we observe an asymmetric relationship, where x2 has a stronger Granger causal influence on x1 than x1 on x2. This is the consequence of the fact that the additional noise on x1 has weakened its predictive power of x2, causing an apparent asymmetry in the directionality. Note that this asymmetry is exactly in line with the definition of Granger causality based on a log ratio of prediction errors (comparing the univariate to the bivariate model), and in that sense it is not “wrong.” However, this does lead to a divergence between “Granger causality” and what we as experimentalists would like to infer as “true causality,” where we would like to infer a dominant direction of information flow from an asymmetry in Granger causal estimates.

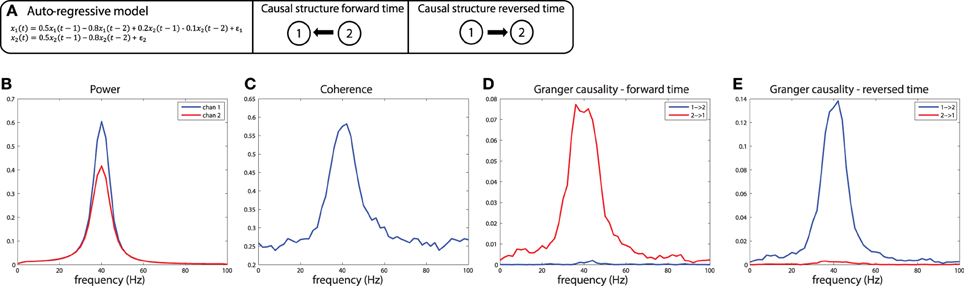

These simulations depict the “worst case scenario,” when a massive difference in SNR exists between channels (SNR of x1 was 1, SNR of x2 was maximal because there was no noise added). Asymmetries in Granger causality that are driven by SNR differences have been defined by Haufe et al. as “weak asymmetries,” as opposed to “strong asymmetries” caused by true time-lagged, causal relationships (Haufe et al., 2012). In order to be able to make a distinction between weak and strong asymmetries in the interpretation of the estimated Granger causality, Haufe et al. suggest to investigate Granger causality after time-reversal of the signals. The underlying rationale is that time-reversal causes a flip in the dominant direction of interaction only in the presence of strong asymmetries, because the time-reversal operation does not affect the SNR of the individual signals (the AR coefficients of the auto-terms in the univariate AR-models are the same in the forward and backward time directions, and therefore the power spectrum and “self-prediction” is insensitive to time reversal), and thus, will not affect differences in directional interactions if these are caused by weak asymmetries. The time-reversed Granger causality computed from the data we simulated earlier is shown in Figure 7G. Evidently, time-reversal reveals that the dominant Granger causal direction (x2 to x1) remains unchanged, indicative of a weak asymmetry. On the other hand, when actual strong asymmetries underlie a directional difference in Granger causality, time-reversed testing would lead to a change in causal flow in the time-reversed compared to normal directions. In the simulations depicted in Figure 8, a strong asymmetry is created, which is a unidirectional flow from x2 to x1, leading to an asymmetric Granger causality in the appropriate direction (Figure 8D). When the signals are time-reversed, the directionality of Granger causality also flips (Figure 8E), as expected. Therefore, in practice, spurious directional differences in Granger causality that are caused only by differences in SNR may in principle be diagnosed with reversed Granger testing, which strongly reduces the false positive rate of detecting spurious Granger causality, also in the presence of linear mixing (Haufe et al., 2012; Vinck et al., 2015).

Figure 8. Time reversed Granger testing reveals the presence of strong asymmetries. (A) An auto-regressive model specifying a unidirectional system in which x2influences x1 at lags 1 and 2. (B) Power, (C) Coherence, and (D) Granger causality estimates for the forward time direction. (E) Granger causality estimates for the reversed time direction. Note that power and coherence remain the same when estimated from data in either the forward or reversed time directions.

Of course, whenever possible, the SNR problem should be addressed at the experimental level, where care should be taken to minimize noise and ensure that SNR is equalized as much as possible over channels. For example, with EEG or invasive LFP recordings, impedances can be equated as much as possible prior to data recordings, and sensors should be positioned in order to maximize SNR. However, this may not be always possible in practice, for instance in non-invasive recordings (field spread), or due to intrinsic differences in activity of the underlying sources. In such cases, one could resort to stratification techniques (Bosman et al., 2012) where across conditions, the distributional properties of signal power are made as similar as possible, by means of trial subsampling. Alternatively, it has been suggested to use analytic approaches to correct for measurement noise post-hoc. For example, some authors have proposed algorithms to separate signal and noise components in recorded signals using state-space modeling (Nalatore et al., 2007; Sommerlade et al., 2015). This approach assumes that observed time-series represent a combination of the signals-of-interest (modeled as an auto-regressive model) and some amount of superimposed noise, and aims at separating these two quantities. In simulations with uncorrelated, Gaussian noise, this approach largely corrects the problem, yielding Granger causality estimates that are not contaminated by noise. Yet another approach is to use Dynamic Causal Models (DCM), where the generative model is an actual biophysically plausible neuronal model, rather than an autoregressive description of time series (Bastos et al., 2015a). Within the DCM framework, it is possible to make a distinction between the dynamics of the underlying biophysical process (the activation and interaction of the underlying neuronal groups) and the measurements that are made of these processes. Therefore, both correlated and uncorrelated observation noise can be taken into account, and consequently connectivity estimates are more robust to changes in SNR (Friston et al., 2014).

The Common Input Problem

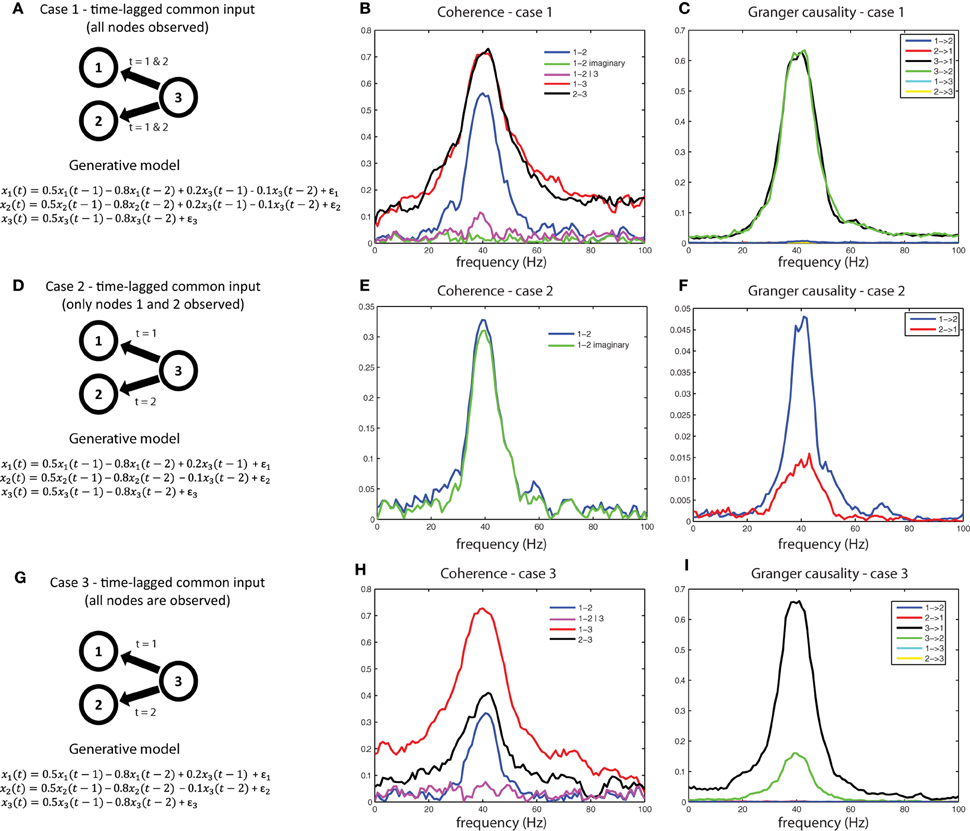

Another interpretational problem in making inferences about estimated interactions from measured data is the difficulty in distinguishing direct from indirect interactions. In other words, if a functional interaction between a pair of signals is detected, this could be caused by common input from a third source, that has not been taken into account. For example, consider the generative model shown in Figure 9A. We used a generative autoregressive model to simulate a connectivity structure between three source (x1, x2, and x3), where x1 and x2 are not directly connected, but receive common input from x3 at time lags 1 and 2. As a consequence of the common input, there will be shared variance between signals x1 and x2. Figure 9B shows the respective coherence spectra for case 1. Note the clear peak in the coherence spectrum between x1 and x2 at 40 Hz (blue trace). If we were to interpret this coherence spectrum as evidence of a direct neuronal interaction, it would be a spurious inference. Therefore, to aid in the interpretation of this coherence spectrum, we need to explicitly consider whether the shared variance that causes the observed coherence is due to common input. If we observe all three nodes, this can be done by considering coherence between x1and x2 after partializing out the contribution of x3(magenta trace in Figure 9B), which reports substantially reduced coherence values between x1and x2, indicative of shared variance (coherence) due to common input. Another metric to look at in this case would be the imaginary part of coherency (green trace), which is also close to zero, indicating that shared variance between x1 and x2 can be accounted for entirely by the zero-lag component, as could be expected by field spread or, in this case, by common input that arrives simultaneously and at the same lags at x1and x2.

Figure 9. A simulation of the common input problem. (A) The auto-regressive form of a model that simulates common input from x3 to x1 and x2. (B) spurious coherence between x1 and x2 caused by common input. Imaginary coherence (green) and partial coherence (purple) are close to zero, indicating an interaction that is both instantaneous and mediated (by x3), respectively. (C) Granger causal estimates detect the common input as distinct from directed interactions between x1 and x2 (which are near zero). (D–F) When time-lagged common input from x3 is present and only x1 and x2 are observed, the data will appear to reflect a time-lagged interaction. This situation can only be interpreted correctly by recording the common driver (G,H), and applying partial coherence (H) or multivariate Granger causality (I).

We can also examine whether the shared variance between x1and x2 is due to effects of their direct interaction or common input by computing Granger causality. Figure 9C shows the Granger causality estimates between x1, x2, and x3 based on a multivariate decomposition of the spectral density matrix, i.e., observing all three nodes. By decomposing the undirected connectivity into directed connectivity using Granger causality, only connections with true underlying coupling (connections x3 x1 and x3

x1 and x3 x2) survive, and the spurious connections (x1

x2) survive, and the spurious connections (x1 x2 and x2

x2 and x2 x1) are suppressed, because the shared variance is absorbed by their instantaneous interactions which are not part of a causal (lagged) interaction. This would also be a situation where conditional or partial Granger causality would be useful for diagnosing the true underlying connectivity between x1, x2, and x3. These simulations can be realized by running the code in script sim_commoninput.pdf.

x1) are suppressed, because the shared variance is absorbed by their instantaneous interactions which are not part of a causal (lagged) interaction. This would also be a situation where conditional or partial Granger causality would be useful for diagnosing the true underlying connectivity between x1, x2, and x3. These simulations can be realized by running the code in script sim_commoninput.pdf.